Start Here

Published:

2021 #Content/Paper by Nelson Elhage, Neel Nanda, Catherine Olsson, Tom Henighan, Nicholas Joseph, Ben Mann, Amanda Askell, Yuntao Bai, Anna Chen, Tom Conerly, Nova DasSarma, Dawn Drain, Deep Ganguli, Zac Hatfield-Dodds, Danny Hernandez, Andy Jones, Jackson Kernion, Liane Lovitt, Kamal Ndousse, Dario Amodei, Tom Brown, Jack Clark, Jared Kaplan, Sam McCandlish, and Chris Olah. There’s also an accompanying YouTube playlist and a walkthrough by Neel Nanda.

Note: traditional attention was first used as a mechanism to improve the performance of RNNs. The Attention is All You Need paper proposed self-attention as a complete replacement for the RNN itself, which has subsequently proven to be more performant as well as faster due to the lack of reliance on sequential processing.

2024 ICML #Content/Paper by Alex Tamkin, Mohammad Taufeeque, and Noah D. Goodman.

One of three approaches to alleviating superposition suggested in Anthropic’s toy models blog post, which has a long history in the sparse coding literature and may even be a strategy employed by the brain.

2022 #Content/Paper by Sid Black, Lee Sharkey, Leo Grinsztajn, Eric Winsor, Dan Braun, Jacob Merizian, Kip Parker, Carlos Ramón Guevara, Beren Millidge, Gabriel Alfour, and Connor Leahy.

That monosemantic concepts are represented in neural networks as directions (vectors) in activation space, that conjunctions of concepts are represented as linear combinations of these vectors, and that we can understand the computations done by models between layers as nonlinear operations on them.
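As a toy illustration of this hypothesis (a sketch with made-up numbers, not taken from any of the papers listed here), the snippet below treats two hypothetical concepts as orthogonal unit directions in a 3-dimensional activation space, builds an activation as a linear combination of them, and reads each concept's strength back off with a dot product. The concept names, directions and coefficients are all assumptions chosen for illustration.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def combine(coefficients, directions):
    """Linear combination of direction vectors with the given coefficients."""
    dim = len(directions[0])
    return [sum(c * d[i] for c, d in zip(coefficients, directions)) for i in range(dim)]


# Hypothetical concept directions (assumed for illustration only).
# They are unit length and orthogonal, but not aligned with any single axis,
# so neither concept corresponds to one individual "neuron".
RED = [0.6, 0.8, 0.0]
ROUND = [-0.8, 0.6, 0.0]

# An activation representing "strongly red, somewhat round".
activation = combine([1.0, 0.5], [RED, ROUND])

# Projecting onto each direction recovers that concept's strength.
red_strength = dot(activation, RED)
round_strength = dot(activation, ROUND)
print(red_strength, round_strength)
```

The read-out is clean here only because the two directions are orthogonal. When more concepts are packed in than there are dimensions, the directions cannot all be orthogonal and each projection picks up some interference from the others.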

Embodies a fundamental belief that neural networks are only black boxes by default, and that we can understand their function in terms of interpretable concepts and algorithms if we try hard enough.

2024 #Content/Paper by Joshua Engels, Isaac Liao, Eric J. Michaud, Wes Gurnee, and Max Tegmark.

2024 #Content/Blog by Anthropic.

2022 #Content/Blog by Nelson Elhage, Tristan Hume, Catherine Olsson, Nicholas Schiefer, Tom Henighan, Shauna Kravec, Zac Hatfield-Dodds, Robert Lasenby, Dawn Drain, Carol Chen, Roger Grosse, Sam McCandlish, Jared Kaplan, Dario Amodei, Martin Wattenberg, and Chris Olah.

The term “transformer” doesn’t have a fully precise definition, but in general is used to refer to any neural sequence-to-sequence model where the only interaction between positions is through a sequence of multi-head attention layers that iteratively update a central embedding called a residual stream by addition.
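The definition above can be sketched in a few lines of pure Python. This is a minimal illustration under simplifying assumptions, not a faithful implementation of any particular model: it omits MLP blocks, layer normalisation, positional information and causal masking, uses random weights, and every function name (`build_layer`, `head_output`, `attention_layer`, `run_model`) is hypothetical. What it does show is the core structure: each head mixes information across positions, and its output is added into the residual stream.

```python
import math
import random


def matvec(matrix, vec):
    """Multiply a (rows x cols) matrix, stored as nested lists, by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows, cols, rng, scale=0.1):
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def build_layer(d_model, d_head, n_heads, rng):
    """A layer is a list of heads; each head is a tuple (W_Q, W_K, W_V, W_O)."""
    return [
        (
            random_matrix(d_head, d_model, rng),  # W_Q: reads queries from the stream
            random_matrix(d_head, d_model, rng),  # W_K: reads keys from the stream
            random_matrix(d_head, d_model, rng),  # W_V: reads values from the stream
            random_matrix(d_model, d_head, rng),  # W_O: writes back to the stream
        )
        for _ in range(n_heads)
    ]


def head_output(stream, w_q, w_k, w_v, w_o):
    """One attention head: the only place where positions interact."""
    queries = [matvec(w_q, x) for x in stream]
    keys = [matvec(w_k, x) for x in stream]
    values = [matvec(w_v, x) for x in stream]
    d_head = len(w_q)
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_head) for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(d_head)]
        outputs.append(matvec(w_o, mixed))
    return outputs


def attention_layer(stream, heads):
    """Add every head's output into the residual stream, position by position."""
    updated = [list(x) for x in stream]
    for head in heads:
        for pos, out in enumerate(head_output(stream, *head)):
            updated[pos] = [a + b for a, b in zip(updated[pos], out)]
    return updated


def run_model(stream, layers):
    """Iteratively update the residual stream through a sequence of layers."""
    for heads in layers:
        stream = attention_layer(stream, heads)
    return stream


rng = random.Random(0)
stream = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(5)]  # 5 positions, d_model = 8
layers = [build_layer(d_model=8, d_head=4, n_heads=2, rng=rng) for _ in range(3)]
final_stream = run_model(stream, layers)
print(len(final_stream), len(final_stream[0]))
```

Because every head only ever adds to the stream, the final embedding at each position is the initial embedding plus the sum of all head outputs across all layers, which is what makes the residual stream a useful object to reason about.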

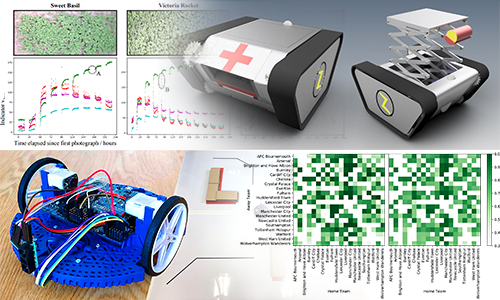

Assessed work from BEng and MSc degrees

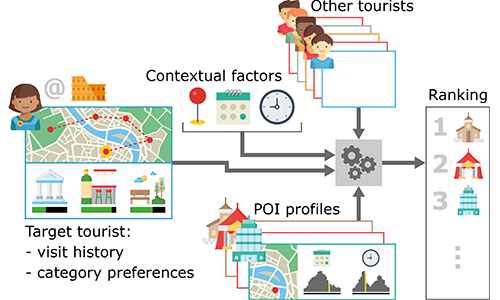

Harnessing Social Data for Travel Recommendation

A minimal Hanabi emulator, written in pure Python.

Published in 2018

Excerpt.

Recommended citation: Bewley, Tom. "Use of Machine Vision and Intelligent Data Processing Algorithms to Monitor and Predict Crop Growth in Vertical Farms." BEng Thesis, University of Bristol, 2018. https://bit.ly/2MHrhWb

Published in 2019 International Conference on Robotics and Automation (ICRA), 2019

A theoretical framework for embedding robotics problems into video games, enabling distributed control by a crowd of players.

Recommended citation: Bewley, Tom, and Liarokapis, Minas. "On the Combination of Gamification and Crowd Computation in Industrial Automation and Robotics Applications." 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019. /files/gamification.pdf

Published in 2019

Excerpt.

Recommended citation: Bewley, Tom. "On Tour: Harnessing Social Tourism Data for City and Point-of-Interest Recommendation." MSc Thesis, University of Bristol, 2019. /files/msc-thesis.pdf

Published in DSRS-Turing 2019: 1st International ‘Alan Turing’ Conference on Decision Support and Recommender Systems, 2019

A data-driven recommender system for tourism.

Recommended citation: Bewley, Tom, and Carrascosa, Ivan Palomares. "On Tour: Harnessing Social Tourism Data for City and Point of Interest Recommendation." 1st International ‘Alan Turing’ Conference on Decision Support and Recommender Systems (DSRS-Turing 2019). 2019. https://www.researchgate.net/profile/Tom_Bewley2/publication/338581229_On_Tour_Harnessing_Social_Tourism_Data_for_City_and_Point_of_Interest_Recommendation/links/5e1dd8e6458515d2b46d3eb6/On-Tour-Harnessing-Social-Tourism-Data-for-City-and-Point-of-Interest-Recommendation.pdf

Published in arXiv, 2020

Describing the various ways of training and evaluating white box approximations of black box policies, and how these can be in conflict.

Recommended citation: Bewley, Tom. "Am I Building a White Box Agent or Interpreting a Black Box Agent?" arXiv preprint 2007.01187. 2020. https://arxiv.org/abs/2007.01187

Published in Trustworthy AI - Integrating Learning, Optimization and Reasoning (also 1st TAILOR Workshop at ECAI 2020), 2020

Introducing I2L and preliminary results.

Recommended citation: Bewley T., Lawry J., Richards A. (2021) Modelling Agent Policies with Interpretable Imitation Learning. In: Heintz F., Milano M., O'Sullivan B. (eds) Trustworthy AI - Integrating Learning, Optimization and Reasoning. TAILOR 2020. Lecture Notes in Computer Science, vol 12641. Springer, Cham. https://link.springer.com/chapter/10.1007%2F978-3-030-73959-1_16

Published in 35th AAAI Conference on Artificial Intelligence (AAAI 2021), 2020

Introducing a new decision tree model of black box agent behaviour, which jointly captures the policy, value function and temporal dynamics.

Recommended citation: Bewley, Tom and Lawry, Jonathan. "TripleTree: A Versatile Interpretable Representation of Black Box Agents and their Environments" 35th AAAI Conference on Artificial Intelligence (AAAI 2021). 2021. https://arxiv.org/abs/2009.04743

Published in 21st International Conference on Autonomous Agents and Multiagent Systems (AAMAS 2022), 2021

An online, active learning algorithm that uses human preferences to construct reward functions with intrinsically interpretable, compositional tree structures.

Recommended citation: Bewley, Tom and Lecue, Freddy. "Interpretable Preference-based Reinforcement Learning with Tree-Structured Reward Functions" Proc. of the 21st International Conference on Autonomous Agents and Multiagent Systems (AAMAS 2022). 2022. http://arxiv.org/abs/2112.11230

Published in Thirty-sixth Conference on Neural Information Processing Systems (NeurIPS 2022), 2022

We generalise reward modelling for reinforcement learning to handle non-Markovian rewards, and propose new interpretable multiple instance learning models for this problem.

Recommended citation: Early, Joseph, Tom Bewley, Christine Evers, and Sarvapali Ramchurn. "Non-Markovian Reward Modelling from Trajectory Labels via Interpretable Multiple Instance Learning" Proc. of the Thirty-sixth Conference on Neural Information Processing Systems (NeurIPS 2022). 2022. https://arxiv.org/abs/2205.15367

Published in XAI-IJCAI22 Workshop, 2022

A data-driven, model-agnostic technique for generating a human-interpretable summary of the salient points of contrast within an evolving dynamical system.

Recommended citation: Bewley, Tom and Lawry, Jonathan and Richards, Arthur. "Summarising and Comparing Agent Dynamics with Contrastive Spatiotemporal Abstraction" XAI-IJCAI22 Workshop. 2022. https://arxiv.org/abs/2201.07749

Published in arXiv, 2022

We show that reward learning with tree models can be competitive with neural networks, and demonstrate some of its interpretability benefits.

Recommended citation: Bewley, Tom, Jonathan Lawry, Arthur Richards, Rachel Craddock, and Ian Henderson. "Reward Learning with Trees: Methods and Evaluation" arXiv preprint 2210.01007. 2022. https://arxiv.org/abs/2210.01007

Published in AIAA SciTech Forum, 2023

We show that reward learning with tree models can be competitive with neural networks in an aircraft handling domain, and demonstrate some of its interpretability benefits.

Recommended citation: Bewley, Tom, Jonathan Lawry, and Arthur Richards. "Learning Interpretable Models of Aircraft Handling Behaviour by Reinforcement Learning from Human Feedback" AIAA SciTech Forum. 2023. https://arxiv.org/abs/2305.16924

Published in Seventh Workshop on Generalization in Planning, 2023

We propose methods for improving the performance of zero-shot RL methods when trained on sub-optimal offline datasets.

Recommended citation: Jeen, Scott and Tom Bewley and Jonathan M. Cullen. "Conservative World Models" Seventh Workshop on Generalization in Planning. 2023. https://openreview.net/pdf?id=6oPZcdzFjW

Published in 2024

Excerpt.

Recommended citation: Bewley, Tom. "Tree Models for Interpretable Agents." PhD Thesis, University of Bristol, 2024. https://research-information.bris.ac.uk/en/studentTheses/tree-models-for-interpretable-agents

Published in International Conference on Machine Learning (ICML), 2024

A model-agnostic method for learning local and global counterfactual explanations in the form of generalised rules.

Recommended citation: Bewley, Tom, Salim I. Amoukou, Saumitra Mishra, Daniele Magazzeni, and Manuela Veloso. "Counterfactual Metarules for Local and Global Recourse" International Conference on Machine Learning (ICML). 2024. https://arxiv.org/abs/2405.18875

Published:

As it stands I’m precisely 13 days into my PhD, which means a lot of reading, and I thought I’d kick this blog off with a weekly rolling ‘diary’ of things I read, watch and otherwise consume which may have some influence on my PhD topic. Most of the papers have words pertaining to explanation in there, and that’s because I did a massive scrape of papers with that keyword. I figured that would be a reasonable start.

Published:

Approximately three weeks in, I’m starting to work on a case study project that will allow me to explore some of the key ideas around multi-agent explainability – collision avoidance within a population of autonomous vehicles on road / track networks. As a result, more of my reading this week has focused specifically on the multi-agent context.

Published:

This week didn’t involve very much reading since I focused instead on my practical investigation of the traffic coordination problem. Nonetheless, I encountered a variety of fascinating ideas.

Published:

Decision trees for state space segmentation; lightweight manual labelling as a ‘seed’ for interpretability; the dangers of homogeneous distributed control; AI and the climate crisis.

Published:

The theory of why-questions; fidelity versus accuracy; trees and programs as RL policies; partially-interpretable hybrids.

Published:

Modelling other agents; DAGGER; evaluating feature importance visualisations; self, soul and circular ethics.

Published:

Meta learning causal relations; decomposing explanation questions; misleading explanations; the critical influence of metrics.

Published:

State representation learning; emotions and qualitative regions for heuristic explanation; causal reasoning as a middle ground between statistics and mechanics; deep learning and neuroscientific discovery.

Published:

Model extraction; world models and representations; a MAS taxonomy.

Published:

State representation learning in Atari; AI shortcuts and ethical debt; cloning swarms.

Published:

Distillation and cloning; onboard swarm evolution; The Mind’s I chapters.

Published:

Goal hierarchies as rule sets; mutual information and auxiliary tasks for representation learning; model-based understanding.

Published:

Theory-of-mind as a general solution; factual and counterfactual explanation; semantic development in neural networks; cloning without action knowledge; intuition pumps.

Published:

Integrating knowledge and machine learning; folk psychology and intentionality; soft decision trees; conceptual spaces.

Published:

Confident execution framework; explananda as differences; online decision tree induction; hybrid AI design patterns.

Published:

Imitation by coaching; GAIL; human-centric vs robot-centric; DeepMimic.

Published:

Using $Q$ for imitation; differentiable decision trees and their application to RL; interactive explanations with Glass-Box.

Published:

Formalising interpretation and explanation; operationally-meaningful representations; Conceptual Spaces book.

Published:

Symbols and cognition; robust AI through hybridisation; causal modelling via RL interventions; environment as an engineered system.

Published:

Rule-based regularisation; DeepSHAP for augmenting GAN training; image schemas as conceptual primitives; imitating DDPG with a fuzzy rule-based system.

Published:

Constraining embeddings with side information; latent actions; RL with abstract representations and models; fuzzy state prototypes; index-free imitation.

Published:

Explanation-based tuning; saliency maps for vision-based policies; RL with differentiable decision trees.

Published:

Explanatory debugging; latent canonicalisations; the perceptual user interface; automatic curriculum learning.

Published:

Trustworthy AI; unifying imitation and policy gradient; soft decision trees; SRL with dimension specialisation.

Published:

Imitation learning using value or reward.

Published:

Terminological quagmires; (mis)interpreting interpolation; interaction is paramount.

Published:

PechaKucha talk introducing the motivation behind my PhD topic, as part of a one-day conference on socially-responsible AI.

Published:

Talk presenting an overview of my MSc thesis project, as part of the 1st International ‘Alan Turing’ conference on Decision Support and Recommender Systems.

Published:

Talk presenting an overview of my PhD research so far, as part of a one-day conference showcasing student work within the School of Computer Science, Electrical and Electronic Engineering, and Engineering Maths (SCEEM) at the University of Bristol.

Published:

Presentation of my paper of the same name, which can be found here.

Published:

Recording of a talk delivered to fellow Bristol researchers on 18/01/21, as part of a one-day symposium on Combining Knowledge and Data.

Undergraduate course, Faculty of Engineering, University of Bristol, 2019

Technical assistance for second year concept generation and MATLAB modelling project.

Undergraduate course, Faculty of Engineering, University of Bristol, 2019

Technical assistance for fourth year research and modelling activities, which form the first half of the centrepiece two-year group design project in the latter half of the Engineering Design programme.